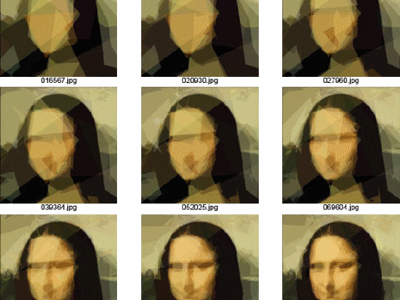

Nightmare AI. https://t.co/YPyxLL4kQQ

— Naghmeh Rezaei (@ruz_us) November 1, 2017

A cosmic multimessenger gold rush: what we're learning from the collision of neutron stars https://t.co/72Q7fe4QUK pic.twitter.com/Rqu5ZLAjIM

— Science Magazine (@sciencemagazine) October 20, 2017

Future of Sequencing and Data Storage. https://t.co/rUx5n2pgI6

— Naghmeh Rezaei (@ruz_us) October 13, 2017

How Crispr Could Snip Away Some of Humanity’s Worst Diseases https://t.co/g55xk6635h

— Naghmeh Rezaei (@ruz_us) May 7, 2017

Behind the scene team #plantR #NasaSpaceApps #SpaceAppsSV pic.twitter.com/i3kD6DR61c

— Naghmeh Rezaei (@ruz_us) April 30, 2017

Team #PlantR rocks #NasaSpaceApps #spaceAppsSV pic.twitter.com/UoFDsjZigg

— Naghmeh Rezaei (@ruz_us) April 30, 2017

Thrilled to be spending the weekend at #spaceapps. #spaceappssv pic.twitter.com/ny78i7t88H

— Naghmeh Rezaei (@ruz_us) April 29, 2017

Proud to be recipient of BSC travel award https://t.co/h9U637g9gh

— Naghmeh Rezaei (@ruz_us) February 17, 2017

Genetic Algorithm

One question that has always interested me is how the process of “decision making” works. We humans don’t act randomly; our decisions are based on a thought process inside our brains. What if a computer were to make a decision to achieve a certain result?

Some algorithms, called evolutionary algorithms, evolve autonomously.

The Genetic Algorithm (GA) is one example: it evolves a population of candidate solutions to optimization and search problems. Inspired by natural selection, it is used for large search spaces. It is essentially a trial-and-error process in which the “best” strategy wins.
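To make the trial-and-error loop concrete, here is a minimal Python sketch of a genetic algorithm. Everything in it is an illustrative assumption rather than a specific published implementation: the toy objective (OneMax, i.e. maximize the number of 1 bits in a bit string), tournament selection, one-point crossover, bit-flip mutation, keeping the best individual each generation (elitism), and all parameter values.

```python
import random
from typing import List, Tuple

Genome = List[int]


def fitness(genome: Genome) -> int:
    """Toy objective (OneMax, an assumption): number of 1 bits; the optimum is all ones."""
    return sum(genome)


def tournament(pop: List[Genome], rng: random.Random, k: int = 3) -> Genome:
    """Selection: draw k random individuals and keep the fittest one."""
    return max(rng.sample(pop, k), key=fitness)


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """One-point crossover: prefix from one parent, suffix from the other."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    """Flip each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def genetic_algorithm(
    n_bits: int = 20, pop_size: int = 30, generations: int = 100, seed: int = 0
) -> Tuple[Genome, List[int]]:
    """Evolve a population; return the best genome and the best fitness per generation."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history: List[int] = []
    for _ in range(generations):
        best = max(pop, key=fitness)
        history.append(fitness(best))
        # Elitism: the current best survives unchanged, so best fitness never drops
        children = [best]
        while len(children) < pop_size:
            child = crossover(tournament(pop, rng), tournament(pop, rng), rng)
            children.append(mutate(child, 1.0 / n_bits, rng))
        pop = children
    best = max(pop, key=fitness)
    history.append(fitness(best))
    return best, history


best, history = genetic_algorithm()
print("best fitness:", fitness(best), "initial best:", history[0])
```

The “best strategy wins” idea shows up twice here: fitter individuals are more likely to win tournaments and become parents, and elitism guarantees the best solution found so far is never lost.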

There are many amazing examples; here are two of them:

Constrained Optimization

Optimization, the selection of the best values for a set of parameters, is an important problem across many fields of science. When the best possible answer is subject to constraints, the problem becomes much harder to deal with. However, if the constraints are equalities, the method of Lagrange multipliers can be used to turn the problem into an unconstrained one. The method was introduced by Joseph-Louis Lagrange more than 200 years ago.

Consider first the problem of optimizing a function with no constraint: one would take the derivative and find its roots to get the extrema of the function. If the variables must also satisfy certain constraints, finding a closed-form expression for the maxima or minima is no longer straightforward. Lagrange introduced a method, Lagrange multipliers, that turns such a problem into an unconstrained one:

Goal: optimize f(x,y), subject to: g(x,y)-c=0

Define the Lagrangian as:

$$\mathcal{L}(x, y, \lambda) = f(x, y) - \lambda \bigl(g(x, y) - c\bigr)$$

Consider the contour lines of f, along which f has a fixed value, together with the constraint curve g(x,y) = c.

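If the constraint curve g(x,y) = c crosses a contour line of f, then moving along the constraint curve changes the value of f, so the crossing point is not an extremum. If instead the constraint curve touches a contour line of f tangentially, f does not change (to first order) as we move along the constraint, so that point is a potential extremum. Tangency means the gradients of f and g are parallel, which is mathematically equivalent to:

$$\nabla f(x, y) = \lambda \, \nabla g(x, y)$$

for some scalar λ, the Lagrange multiplier.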
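The tangency condition ∇f = λ∇g, together with the constraint g(x, y) = c, is a system of three equations in x, y and λ. As a minimal sketch (not the only way to solve it), the Python code below applies Newton's method to that system, using a forward-difference Jacobian and a small Gaussian-elimination solver. The function names, the finite-difference step, the tolerance, and the unit-circle example f(x, y) = x + y with x² + y² = 1 are all hypothetical choices made for illustration.

```python
from typing import Callable, List, Sequence, Tuple

Vec = List[float]
Grad = Callable[[float, float], Tuple[float, float]]


def solve_linear(a: List[Vec], b: Vec) -> Vec:
    """Solve the small dense system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def lagrange_stationary_point(
    grad_f: Grad,
    g: Callable[[float, float], float],
    grad_g: Grad,
    c: float,
    start: Sequence[float],
    tol: float = 1e-12,
    max_iter: int = 50,
) -> Tuple[float, float, float]:
    """Newton's method on grad f = lam * grad g and g = c; returns (x, y, lam).

    Which stationary point is found depends on the starting guess `start`.
    """

    def residual(v: Vec) -> Vec:
        x, y, lam = v
        fx, fy = grad_f(x, y)
        gx, gy = grad_g(x, y)
        return [fx - lam * gx, fy - lam * gy, g(x, y) - c]

    v = [float(s) for s in start]
    h = 1e-7  # finite-difference step for the Jacobian
    for _ in range(max_iter):
        r = residual(v)
        if max(abs(e) for e in r) < tol:
            break
        # Forward-difference Jacobian: column j approximates d(residual)/d(v[j])
        jac = [[0.0] * 3 for _ in range(3)]
        for j in range(3):
            shifted = v[:]
            shifted[j] += h
            rj = residual(shifted)
            for i in range(3):
                jac[i][j] = (rj[i] - r[i]) / h
        step = solve_linear(jac, [-e for e in r])
        v = [vi + si for vi, si in zip(v, step)]
    return v[0], v[1], v[2]


# Hypothetical example: extremize f(x, y) = x + y on the unit circle x^2 + y^2 = 1
x, y, lam = lagrange_stationary_point(
    grad_f=lambda x, y: (1.0, 1.0),
    g=lambda x, y: x * x + y * y,
    grad_g=lambda x, y: (2 * x, 2 * y),
    c=1.0,
    start=(1.0, 1.0, 1.0),
)
print(round(x, 6), round(y, 6), round(lam, 6))  # 0.707107 0.707107 0.707107
```

Starting instead from (-1, -1, -1) leads to the other tangency point, x = y = -1/√2, which is the minimum. Solving the conditions only yields candidate extrema, so each candidate still has to be compared (for example by evaluating f at each one).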

Solving the Lagrange equations gives the candidate constrained extrema of f: